From the DC-10 to the Boeing 737 MAX

How We Handle Aviation Disasters

At the Paris airport on March 3, 1974, Turkish Airlines flight 981 was waiting at the gate, and so was Captain Nejat Berkoz. The Paris-to-London flight, the second leg of a journey that began in Istanbul, was usually underbooked, but that day it was packed with 335 passengers. A strike at British European Airways had forced many people trying to get home to the UK to rebook their flights. It also meant that Berkoz had to wait an additional 30 minutes.

During those 30 minutes, the French ground crew finished loading all the new bags into the aft cargo hold of the DC-10. The DC-10 was only a few years old at this point. It was the McDonnell Douglas Aircraft Company’s jumbo jet, built to compete with Boeing’s famous 747. When the ground crew closed the cargo door, one of them pushed down on the locking handle. He later reported that he needed no extra force to secure the door. All seemed well.

Unfortunately, he missed something important. The door he closed carried a sign warning ground crew to check through a small viewing window that the locking mechanism was truly secure. But the sign was only in English and Turkish, so the French crewman had no idea.

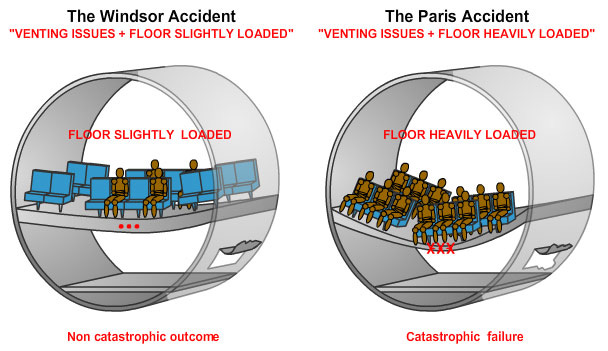

Neither did Berkoz as he finally took off at 12:32 p.m. As the plane climbed, the cabin and the cargo hold stayed pressurized while the air pressure outside dropped. The pressure difference grew until the aft cargo door burst off the DC-10. With the cargo hold now open to the outside air, the still-pressurized cabin above pushed down on the floor. That pressure differential, combined with the weight of the completely full aircraft, caused the cabin floor to collapse. The aircraft’s control cables, including those for the tail engine, all ran through the cabin floor, and so were severed. With no control, and the plane falling apart, Berkoz stood no chance of recovering the aircraft. Despite his more than 7,000 hours of flight experience, he could not stop the plane from striking the ground at 487 mph (784 km/h). Crashes at such speeds are unsurvivable.

Turkish Airlines flight 981 demonstrates the degree to which everything on an airplane must work perfectly to avoid catastrophe. A single flaw in the DC-10’s cargo door, compounded by other flaws in its design, caused what was, at the time, the deadliest air disaster in history. The aft cargo door was designed to open outward to maximize cargo space, but that left it prone to failure under the pressure differential between the inside and the outside of the aircraft. The floor had no vents to equalize the pressure between the cabin and the cargo hold, nor supports to keep it intact if the hold depressurized while the cabin was full. All the controls for the plane’s elevators, rudder, and rear (#2) engine ran through the floor, with no independent backups. So when the locking pins in the door failed, they set off a cascade of failures that inevitably resulted in the loss of the plane. Of course, every one of these was a design failure, but who could have predicted that they would combine into such a catastrophic chain?

It turns out McDonnell Douglas and the FAA knew. Two years earlier, American Airlines flight 96 out of Detroit had experienced the exact same problem. Fortunately, that plane had not been full, so the cabin floor collapsed only partially, and the pilots kept enough control to land the aircraft in what was still an almost miraculous demonstration of skill.

After the accident report on flight 96 was released, the FAA prepared to issue an airworthiness directive (AD) that would have required McDonnell Douglas to fix the locking mechanism on the cargo door, give ground crews a way to verify that the locking pins had engaged, add vents between the cargo bay and the passenger cabin, and reinforce the section of the floor that carried the control cables so that it could not collapse. Such an AD would have grounded all DC-10s until these problems had been resolved.

But McDonnell Douglas feared this would be its death knell in the ongoing competition over the new jumbo jets. The company argued that the AD would be too great a burden. So the FAA agreed not to issue it if McDonnell Douglas fixed the problem voluntarily. Instead, McDonnell Douglas released three service bulletins. These bulletins did not carry the force of the FAA, but airlines still had an incentive to follow them, because ignoring them shifted liability for related failures from McDonnell Douglas to the airlines. All three focused on making sure the locking mechanisms were engaged. For instance, the warning sign the French crewman couldn’t read, and the viewing window it told him to look through, were both added in response to these bulletins. But McDonnell Douglas issued no bulletins about venting or reinforcing the cabin floor. Clearly, McDonnell Douglas was more concerned with financial pressures than with the engineering failures of its aircraft.

This case has a lot in common with the troubles at Boeing with the 737 MAX aircraft.

On January 5th, 2024, the captain and first officer of Alaska Airlines flight 1282 were likely discussing some recent issues with the aircraft, double-checking the details with one another and confirming their course of action in case of an incident. On the previous three flights, a pressurization controller on the aircraft had reported an error. Such aircraft have ample backups, and these errors don’t usually indicate any serious problem. But Alaska Airlines had, in an abundance of caution, limited the aircraft to overland flights only, so that if it could not maintain adequate pressure, the crew could easily fly it at low altitude to a nearby airport.

Just after five in the afternoon, the airplane took off from Portland, headed for Southern California. It didn’t take long before the plane experienced more than a pressurization error. Six minutes into the flight, a door plug next to row 26 on the port side was suddenly ripped from the aircraft, and the cabin rapidly and uncontrollably depressurized.

On other configurations of the 737 MAX 9, that opening would have held an emergency exit. The FAA requires a certain number of emergency exits based in part on the number of passengers. The 737 MAX 9 could support a variety of seating configurations, some of which required additional emergency exits and some of which didn’t. Rather than build two separate fuselages, Boeing designed a door plug to fill the exit opening on aircraft configured for fewer passengers. It was this plug that blew off the aircraft. Fortunately, no one was sitting in the seat next to the plug. Three passengers had to be treated for minor injuries, and everyone on board endured what was surely a terrifying experience, but no one was killed. The crew descended below 10,000 feet for the safety of the passengers, then turned around and landed safely back in Portland.

But this hadn’t been the first problem with the 737 MAX. The MAX 8 had suffered two catastrophic failures, Lion Air flight 610 and Ethiopian Airlines flight 302, both of which killed everyone on board. Investigations later revealed that both disasters resulted from a faulty flight control system, the Maneuvering Characteristics Augmentation System (MCAS). MCAS was intended to make up for the aerodynamically efficient but unstable design of the aircraft itself, yet it relied on a single angle-of-attack sensor with no backup, making it a disaster waiting to happen.

Ironically, many of Boeing’s troubles can be traced back to its 1997 merger with McDonnell Douglas. Harry Stonecipher, the former CEO of McDonnell Douglas, became Boeing’s CEO in 2003 and made the very intentional decision to shift Boeing’s culture to focus more on costs than on good engineering.

“When people say I changed the culture of Boeing, that was the intent, so that it is run like a business rather than a great engineering firm.”

One such change was the decision to keep building on designs from the 1960s and 1970s rather than develop a new single-aisle aircraft. He redirected the money saved into stock buybacks. His successor, Jim McNerney, followed the same strategy even as Boeing was losing customers to Airbus. He chose to upgrade the 737 into the 737 MAX over five years rather than design a new aircraft that could take full advantage of decades of advances in aviation.

Boeing also began to outsource most of its production, so that Boeing’s own workforce became responsible mostly for assembly. For instance, Spirit AeroSystems was the company that manufactured the failed door plug.

The overall problem seems to be that, by increasing financial and schedule pressures on the Boeing workforce and by limiting the role of Boeing engineers, Boeing leadership slowly undermined the culture of safety that had been so meticulously built up there. Aviation is a high-reliability industry. Despite the setbacks with the 737 MAX, it is still safer to fly than to drive. That is an amazing achievement, given the complexity of air travel. But the problem with achieving such success in safety is that it can breed complacency. When aviation’s safety record is taken for granted, it is easy to assume that safety just happens, and easy to decide to focus on costs without seeing how the engineering culture actually produces that safety.

But it isn’t just the culture of one company that makes aviation safe. High-reliability industries must take a learning-oriented approach to safety. Just compare the DC-10 to the Boeing 737 MAX. Fifty years ago, depressurization from the loss of a door resulted in the total loss of the aircraft. Today a plane suffering a similar incident can land safely with no loss of life. The industry and its regulators have learned a lot over time, so much that even a devastating failure of safety culture at arguably the most influential aviation company in the world cannot drag safety back to where it once was.

And even with the failures at Boeing, aviation has innumerable other checks on safety. For instance, after Alaska Airlines flight 1282, pressure to change came not just from regulators but from Boeing’s biggest customers. The U.S. Department of Transportation has increased its scrutiny of the company, and the FAA has barred Boeing from expanding its production and may restrict it even further until the company can demonstrate real change. I have no faith that a simple executive shakeup will change the culture at Boeing. After all, the shakeup that followed the tragic disasters of 2018 and 2019 didn’t prevent further errors with the 737 MAX. But I don’t need to have faith in Boeing executives. What we see in response to Boeing’s fall from grace is a key feature of high-reliability systems: an organization’s failure to adhere to safety standards is tightly coupled to its ability to operate at all. Boeing is approaching an existential crisis: fix its culture or die.

I’m not arguing that those who are concerned about what’s happened with the 737 MAX are wrong. Nor am I saying that we don’t need to put pressure on Boeing. We do. It is that very pressure that keeps the other pillars of aviation safety from slipping as well. But what isn’t helpful is the kind of apocalyptic doomerism about air travel that has appeared in the 737 MAX’s wake. Booking flights that don’t use the 737 MAX can help push airlines to put further pressure on Boeing. But what good does it really do to get worked up that a plane might fall on your house? Why can’t we seem to talk about big problems like this without framing them as existential threats? Is it no longer enough to want to hold plane manufacturers accountable for hundreds of lives lost, or do we need the risk of airplanes raining down on our heads? Can we not simply say that no accident should be repeated? Can we not draw the line and say that any crash justifies immediate and serious action to ensure future safety?

When we frame as apocalyptic those challenges that are frankly part and parcel of living in a technological society, we imply that only revolutionary change is a sufficient response. The thing is, air travel, like many technological challenges today, is a highly complex endeavor with a lot of uncertainty. Rapid, revolutionary change in the way we manage aviation is as likely as not to undo the hard-learned lessons we’ve accumulated over the last half century and more. The fact that air travel has remained safe, even in the face of all of Boeing’s failures, is proof to me that what we need is to maintain the high-reliability systems already in place. We need to take incremental steps to make high reliability less demanding and more attainable, not start over from square one.